Event-Driven Visual-Tactile Sensing and Learning for Robots

Many everyday tasks require multiple sensory modalities to perform successfully. For example, consider fetching a carton of soymilk from the fridge: humans use vision to locate the carton and can infer from a simple grasp how much liquid it contains. This inference is robust and runs on a power-efficient neural substrate; human brains require far less energy than current artificial systems.

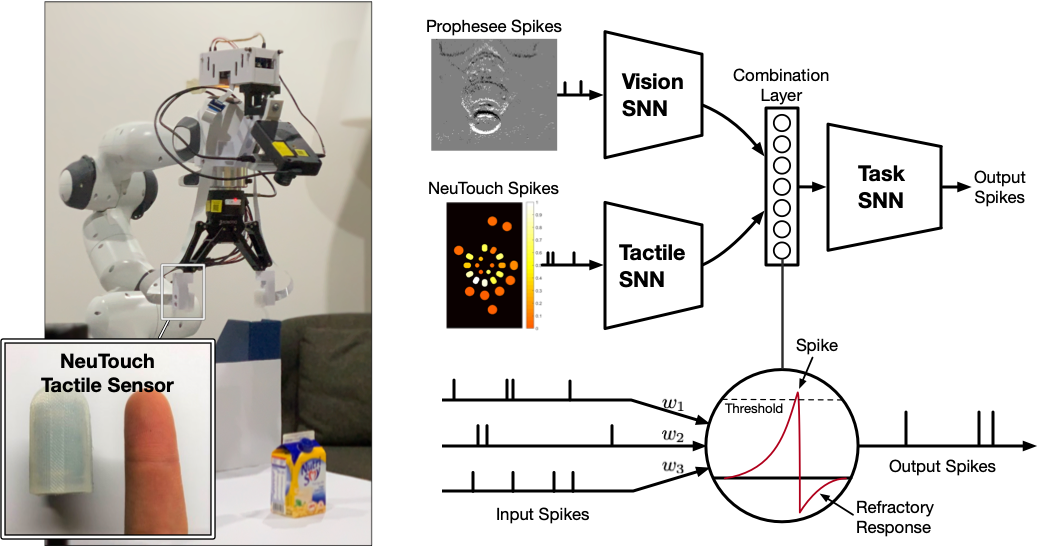

In this work, we draw inspiration from biological systems, which are asynchronous and event-driven. We contribute an event-driven visual-tactile perception system comprising NeuTouch, a biologically-inspired tactile sensor, and VT-SNN, a spiking neural network for multi-modal spike-based perception.
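To make the idea of event-driven, spike-based input concrete, here is a minimal sketch of how asynchronous events from two sensors could be binned into binary spike rasters and stacked for a single model. It is not the authors' implementation: the event format `(timestamp_us, channel)`, the function names `bin_events` and `fuse`, and all sizes and timings are hypothetical choices made for illustration.

```python
from typing import List, Tuple

# Hypothetical event format: (timestamp in microseconds, channel index).
Event = Tuple[int, int]


def bin_events(events: List[Event], n_channels: int,
               duration_us: int, dt_us: int) -> List[List[int]]:
    """Convert asynchronous events into a binary spike raster.

    Returns n_channels rows, each holding one 0/1 entry per time bin.
    Several events landing in the same bin collapse into a single spike.
    """
    n_bins = duration_us // dt_us
    raster = [[0] * n_bins for _ in range(n_channels)]
    for t_us, ch in events:
        b = t_us // dt_us
        # Ignore events outside the time window or with an unknown channel.
        if 0 <= b < n_bins and 0 <= ch < n_channels:
            raster[ch][b] = 1
    return raster


def fuse(tactile: List[List[int]], vision: List[List[int]]) -> List[List[int]]:
    """Stack both rasters channel-wise so one model sees both modalities."""
    return tactile + vision


# Toy data: three tactile channels and two vision channels over 10 ms.
tactile_events = [(1000, 0), (4000, 2), (4500, 2)]
vision_events = [(2000, 1), (9000, 0)]

tactile = bin_events(tactile_events, n_channels=3, duration_us=10_000, dt_us=2_000)
vision = bin_events(vision_events, n_channels=2, duration_us=10_000, dt_us=2_000)
fused = fuse(tactile, vision)

for row in fused:
    print(row)
```

Each row of `fused` is one input channel and each column one time bin, so a channel with no activity stays all zeros. That sparsity is what lets event-driven processing avoid work when nothing changes.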

We evaluate our visual-tactile system (using NeuTouch and a Prophesee event camera) on two robot tasks: container classification and rotational slip detection. In the first, our prototype sensors and spiking models distinguish relatively small differences in weight (approx. 30 g across 20 object-weight classes). In the second, rotational slip is accurately detected within 0.08 s. When tested on the Intel Loihi, the SNN achieved inference speeds similar to a GPU but required an order of magnitude less power.


If you build upon our results and ideas, please use this citation.

@article{taunyazov2020event,
  title = {Event-driven visual-tactile sensing and learning for robots},
  author = {Taunyazov, Tasbolat and Sng, Weicong and See, Hian Hian and Lim, Brian and Kuan, Jethro and Ansari, Abdul Fatir and Tee, Benjamin CK and Soh, Harold},
  journal = {Robotics: Science and Systems (RSS)},
  year = {2020},
}